Model fitting, prediction, and evaluation

Model fitting

Model fitting is technically quite similar across the modeling methods

that exist in R. Most methods take a formula identifying the

dependent and independent variables, accompanied with a data.frame

that holds these variables. Details on specific methods are provided

further down on this document, in part III.

A simple formula could look like: y ~ x1 + x2 + x3, i.e., y is a

function of x1, x2, and x3. Another example is y ~ ., which means

that y is a function of all other variables in the data.frame

provided to the function. See help('formula') for more details about

the formula syntax. In the example below, the function glm is used

to fit generalized linear models. glm returns a model object.

Note that in the examples below, we are using the data.frame ‘sdmdata’

again. First read sdmdata from disk (we saved it at the end of the

previous chapter).

sdmdata <- readRDS("sdm.Rds")

presvals <- readRDS("pvals.Rds")

m1 <- glm(pb ~ bio1 + bio5 + bio12, data=sdmdata)

class(m1)

## [1] "glm" "lm"

summary(m1)

##

## Call:

## glm(formula = pb ~ bio1 + bio5 + bio12, data = sdmdata)

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 9.265e-02 9.729e-02 0.952 0.341341

## bio1 1.351e-03 3.890e-04 3.472 0.000554 ***

## bio5 -1.337e-03 4.495e-04 -2.973 0.003060 **

## bio12 1.437e-04 1.662e-05 8.648 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for gaussian family taken to be 0.1158131)

##

## Null deviance: 94.156 on 615 degrees of freedom

## Residual deviance: 70.878 on 612 degrees of freedom

## AIC: 426.16

##

## Number of Fisher Scoring iterations: 2

m2 = glm(pb ~ ., data=sdmdata)

m2

##

## Call: glm(formula = pb ~ ., data = sdmdata)

##

## Coefficients:

## (Intercept) bio1 bio5 bio6 bio7 bio8

## 0.1844517 -0.0047289 0.0193746 -0.0155329 -0.0184310 0.0008068

## bio9 bio12 bio16 bio17

## NA 0.0004841 -0.0006574 -0.0008551

##

## Degrees of Freedom: 615 Total (i.e. Null); 607 Residual

## Null Deviance: 94.16

## Residual Deviance: 68.18 AIC: 412.2

Models that are implemented in the predicts package do not use a

formula (and most models only take presence points). The envelope

(a.k.a. “Bioclim”) is an example. It only uses presence data, so we use

‘presvals’ instead of ‘sdmdata’.

library(predicts)

## Loading required package: terra

## terra 1.8.23

bc <- envelope(presvals[,c("bio1", "bio5", "bio12")])

bc

## Formal class 'envelope_model' [package "predicts"] with 7 slots

## ..@ min : Named num [1:3] 150 206 372

## .. ..- attr(*, "names")= chr [1:3] "bio1" "bio5" "bio12"

## ..@ max : Named num [1:3] 282 351 7682

## .. ..- attr(*, "names")= chr [1:3] "bio1" "bio5" "bio12"

## ..@ factor : list()

## ..@ names : chr [1:3] "bio1" "bio5" "bio12"

## ..@ presence :'data.frame': 116 obs. of 3 variables:

## .. ..$ bio1 : num [1:116] 263 263 253 243 243 252 240 275 271 274 ...

## .. ..$ bio5 : num [1:116] 338 338 329 318 318 326 317 335 327 329 ...

## .. ..$ bio12: num [1:116] 1639 1639 3624 1693 1693 ...

## ..@ absence :'data.frame': 0 obs. of 0 variables

## Formal class 'data.frame' [package "methods"] with 4 slots

## .. .. ..@ .Data : list()

## .. .. ..@ names : chr(0)

## .. .. ..@ row.names: int(0)

## .. .. ..@ .S3Class : chr "data.frame"

## ..@ hasabsence: logi FALSE

Model prediction

Different modeling methods return different type of ‘model’ objects

(typically they have the same name as the modeling method used). All of

these ‘model’ objects, irrespective of their exact class, can be used to

with the predict function to make predictions for any combination of

values of the independent variables. This is illustrated in the example

below where we make predictions with the glm model object ‘m1’ and for

envelope model ‘bc’, for three records with values for variables bio1,

bio5 and bio12 (the variables used in the example above to create the

model objects).

bio1 <- c(40, 150, 200)

bio5 <- c(60, 115, 290)

bio12 <- c(600, 1600, 1700)

pd <- data.frame(cbind(bio1, bio5, bio12))

pd

## bio1 bio5 bio12

## 1 40 60 600

## 2 150 115 1600

## 3 200 290 1700

predict(m1, pd)

## 1 2 3

## 0.1527031 0.3714683 0.2194479

Making such predictions for a few environments can be very useful to

explore and understand model predictions. For example it used in the

response function that creates response plots for each variable,

with the other variables at their median value.

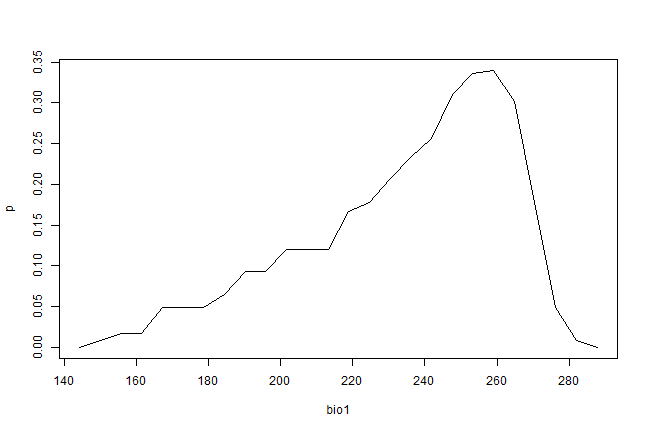

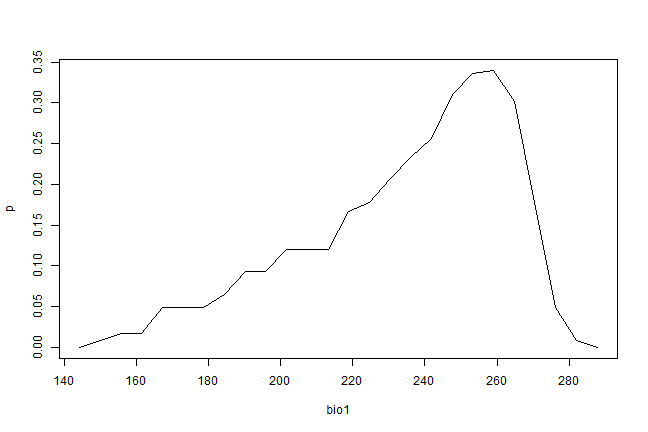

pr <- partialResponse(bc, presvals, "bio1")

plot(pr[[1]], type="l")

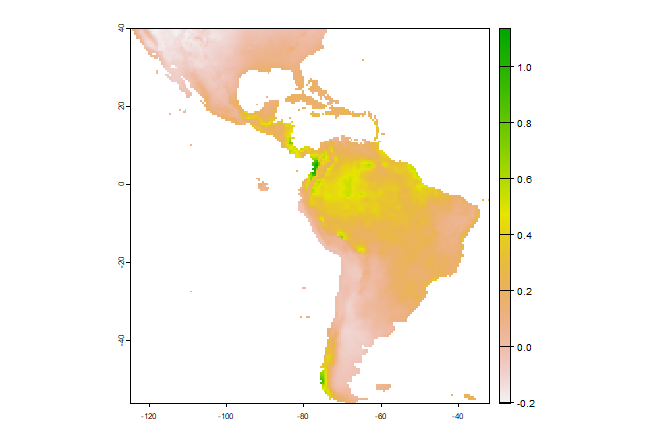

In most cases, howver, the purpose of SDM is to create a map of suitability scores. We can do that by providing the predict function with a Raster* object and a model object. As long as the variable names in the model object are available as layers in the SpatRaster object.

library(predicts)

f <- system.file("ex/bio.tif", package="predicts")

predictors <- rast(f)

names(predictors)

## [1] "bio1" "bio5" "bio6" "bio7" "bio8" "bio9" "bio12" "bio16" "bio17"

p <- predict(predictors, m1)

plot(p)

Model evaluation

It is much easier to create a model and make a prediction than to assess how good the model is, and whether it is can be used for a specific purpose. Most model types have different measures that can help to assess how good the model fits the data. It is worth becoming familiar with these and understanding their role, because they help you to assess whether there is anything substantially wrong with your model. Most statistics or machine learning texts will provide some details. For instance, for a GLM one can look at how much deviance is explained, whether there are patterns in the residuals, whether there are points with high leverage and so on. However, since many models are to be used for prediction, much evaluation is focused on how well the model predicts to points not used in model training (see following section on data partitioning). Before we start to give some examples of statistics used for this evaluation, it is worth considering what else can be done to evaluate a model. Useful questions include:

Does the model seem sensible, ecologically?

Do the fitted functions (the shapes of the modeled relationships) make sense?

Do the predictions seem reasonable? (map them, and think about them)?

Are there any spatial patterns in model residuals? (see Leathwick and Whitehead 2001 for an interesting example)

Most modelers rely on cross-validation. This consists of creating a model with one ‘training’ data set, and testing it with another data set of known occurrences. Typically, training and testing data are created through random sampling (without replacement) from a single data set. Only in a few cases, e.g. Elith et al., 2006, training and test data are from different sources and pre-defined.

Different measures can be used to evaluate the quality of a prediction (Fielding and Bell, 1997, Liu et al., 2011; and Potts and Elith (2006) for abundance data), perhaps depending on the goal of the study. Many measures for evaluating models based on presence-absence or presence-only data are ‘threshold dependent’. That means that a threshold must be set first (e.g., 0.5, though 0.5 is rarely a sensible choice – e.g. see Lui et al. 2005). Predicted values above that threshold indicate a prediction of ‘presence’, and values below the threshold indicate ‘absence’. Some measures emphasize the weight of false absences; others give more weight to false presences.

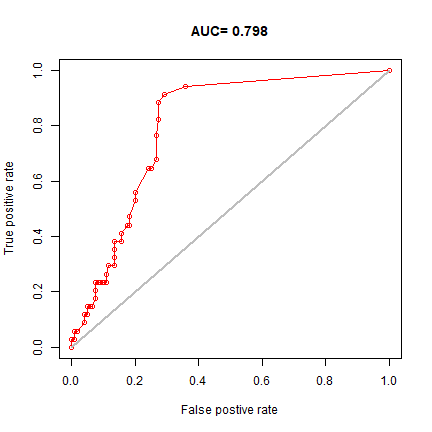

Much used statistics that are threshold independent are the correlation coefficient and the Area Under the Receiver Operator Curve (AUROC, generally further abbreviated to AUC). AUC is a measure of rank-correlation. In unbiased data, a high AUC indicates that sites with high predicted suitability values tend to be areas of known presence and locations with lower model prediction values tend to be areas where the species is not known to be present (absent or a random point). An AUC score of 0.5 means that the model is as good as a random guess. See Phillips et al. (2006) for a discussion on the use of AUC in the context of presence-only rather than presence/absence data.

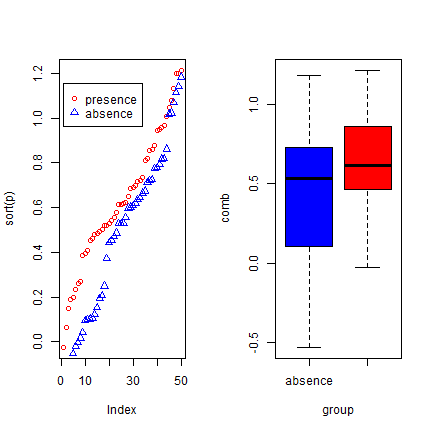

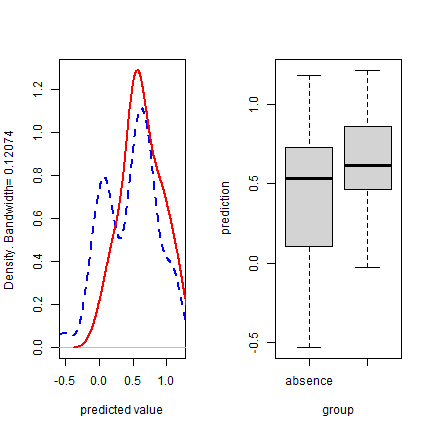

Here we illustrate the computation of the correlation coefficient and

AUC with two random variables. p (presence) has higher values, and

represents the predicted value for 50 known cases (locations) where the

species is present, and a (absence) has lower values, and represents

the predicted value for 50 known cases (locations) where the species is

absent.

p <- rnorm(50, mean=0.7, sd=0.3)

a <- rnorm(50, mean=0.4, sd=0.4)

par(mfrow=c(1, 2))

plot(sort(p), col='red', pch=21)

points(sort(a), col='blue', pch=24)

legend(1, 0.95 * max(a,p), c('presence', 'absence'),

pch=c(21,24), col=c('red', 'blue'))

comb <- c(p,a)

group <- c(rep('presence', length(p)), rep('absence', length(a)))

boxplot(comb~group, col=c('blue', 'red'))

We created two variables with random normally distributed values, but with different mean and standard deviation. The two variables clearly have different distributions, and the values for ‘presence’ tend to be higher than for ‘absence’. Here is how you can compute the correlation coefficient and the AUC:

group = c(rep(1, length(p)), rep(0, length(a)))

cor.test(comb, group)$estimate

## cor

## 0.5811097

mv <- wilcox.test(p,a)

auc <- as.numeric(mv$statistic) / (length(p) * length(a))

auc

## [1] 0.8324

Below we show how you can compute these, and other statistics more

conveniently, with the evaluate function in the predicts package.

See ?pa_evaluate for info on additional evaluation measures that are

available.

library(predicts)

e <- pa_evaluate(p=p, a=a)

class(e)

## [1] "paModelEvaluation"

## attr(,"package")

## [1] "predicts"

e

## @stats

## np na prevalence auc cor pcor ODP

## 1 50 50 0.5 0.832 0.581 0 0.5

##

## @thresholds

## max_kappa max_spec_sens no_omission equal_prevalence equal_sens_spec

## 1 0.406 0.406 -0.182 0.503 0.546

##

## @tr_stats

## treshold kappa CCR TPR TNR FPR FNR PPP NPP MCR OR

## 1 -0.6 0 0.5 1 0 1 0 0.5 NaN 0.5 NaN

## 2 -0.35 0.02 0.51 1 0.02 0.98 0 0.51 1 0.49 Inf

## 3 -0.3 0.04 0.52 1 0.04 0.96 0 0.51 1 0.48 Inf

## 4 ... ... ... ... ... ... ... ... ... ... ...

## 100 1.18 0.02 0.51 0.02 1 0 0.98 1 0.51 0.49 Inf

## 101 1.18 0 0.5 0 1 0 1 NaN 0.5 0.5 NaN

## 102 1.18 0 0.5 0 1 0 1 NaN 0.5 0.5 NaN

par(mfrow=c(1, 2))

plot(e, "density")

plot(e, "boxplot", col=c('blue', 'red'))

Back to some real data, presence-only in this case. We’ll divide the data in two random sets, one for training a Bioclim model, and one for evaluating the model.

samp <- sample(nrow(sdmdata), round(0.75 * nrow(sdmdata)))

traindata <- sdmdata[samp,]

traindata <- traindata[traindata[,1] == 1, 2:9]

testdata <- sdmdata[-samp,]

bc <- envelope(traindata)

e <- pa_evaluate(testdata[testdata==1,], testdata[testdata==0,], bc)

e

## @stats

## np na prevalence auc cor pcor ODP

## 1 28 126 0.182 0.806 0.386 0 0.818

##

## @thresholds

## max_kappa max_spec_sens no_omission equal_prevalence equal_sens_spec

## 1 0.034 0.034 0 0.182 0.114

##

## @tr_stats

## treshold kappa CCR TPR TNR FPR FNR PPP NPP MCR OR

## 1 0 0 0.18 1 0 1 0 0.18 NaN 0.82 NaN

## 2 0.01 0.33 0.67 0.93 0.61 0.39 0.07 0.35 0.97 0.33 20.43

## 3 0.02 0.33 0.68 0.93 0.62 0.38 0.07 0.35 0.98 0.32 21.12

## 4 ... ... ... ... ... ... ... ... ... ... ...

## 33 0.53 0.06 0.82 0.04 1 0 0.96 1 0.82 0.18 Inf

## 34 0.53 0 0.82 0 1 0 1 NaN 0.82 0.18 NaN

## 35 0.53 0 0.82 0 1 0 1 NaN 0.82 0.18 NaN

plot(e, "ROC")

In real projects, you would want to use k-fold data partitioning

instead of a single random sample. The predicts::kfold facilitates

that type of data partitioning. It creates a vector that assigns each

row in the data matrix to a a group (between 1 to k).

Let’s first create presence and background data.

pres <- sdmdata[sdmdata[,1] == 1, 2:9]

back <- sdmdata[sdmdata[,1] == 0, 2:9]

The background data will only be used for model testing and does not need to be partitioned. We now partition the data into 5 groups.

k <- 5

group <- folds(pres, k)

group[1:10]

## [1] 3 3 2 2 3 1 5 1 3 5

unique(group)

## [1] 3 2 1 5 4

table(group)

## group

## 1 2 3 4 5

## 23 23 24 23 23

Now we can fit and test our model five times. In each run, the records corresponding to one of the five groups is only used to evaluate the model, while the other four groups are only used to fit the model. The results are stored in a list called ‘e’.

e <- list()

for (i in 1:k) {

train <- pres[group != i,]

test <- pres[group == i,]

bc <- envelope(train)

p <- predict(bc, test)

a <- predict(bc, back)

e[[i]] <- pa_evaluate(p, a)

}

We can extract several things from list e, but let’s restrict

ourselves to the AUC values and the “maximum of the sum of the

sensitivity (true positive rate) and specificity (true negative rate)”

threshold “spec_sens” (this is sometimes uses as a threshold for setting

cells to presence or absence).

stats <- lapply(e, function(x){x@stats})

stats <- do.call(rbind, stats)

colMeans(stats)

## np na prevalence auc cor pcor

## 2.320000e+01 5.000000e+02 4.434195e-02 7.936935e-01 1.892217e-01 8.967517e-04

## ODP

## 9.556581e-01

The use of AUC in evaluating SDMs has been criticized (Lobo et al. 2008, Jiménez-Valverde 2011). A particularly sticky problem is that the values of AUC vary with the spatial extent used to select background points. Generally, the larger that extent, the higher the AUC value. Therefore, AUC values are generally biased and cannot be directly compared. Hijmans (2012) suggests that one could remove “spatial sorting bias” (the difference between the distance from testing-presence to training-presence and the distance from testing-absence to training-presence points) through “point-wise distance sampling”.

fsp <- system.file("/ex/bradypus.csv", package="predicts")

bradypus <- read.csv(fsp)

bradypus <- bradypus[,-1]

presvals <- extract(predictors, bradypus)

set.seed(0)

backgr <- spatSample(predictors, 500, na.rm=TRUE, xy=TRUE, values=FALSE)

nr <- nrow(bradypus)

s <- sample(nr, 0.25 * nr)

pres_train <- bradypus[-s, ]

pres_test <- bradypus[s, ]

nr <- nrow(backgr)

set.seed(9)

s <- sample(nr, 0.25 * nr)

back_train <- backgr[-s, ]

back_test <- backgr[s, ]

library(dismo)

## Loading required package: raster

## Loading required package: sp

##

## Attaching package: 'sp'

## The following object is masked from 'package:predicts':

##

## geometry

##

## Attaching package: 'dismo'

## The following objects are masked from 'package:predicts':

##

## mess, threshold

sb <- dismo::ssb(pres_test, back_test, pres_train)

sb[,1] / sb[,2]

## p

## 0.0909489

sb[,1] / sb[,2] is an indicator of spatial sorting bias (SSB). If

there is no SSB this value should be 1, in these data it is close to

zero, indicating that SSB is very strong. Let’s create a subsample in

which SSB is removed.

i <- pwd_sample(pres_test, back_test, pres_train, n=1, tr=0.1)

pres_test_pwd <- pres_test[!is.na(i[,1]), ]

back_test_pwd <- back_test[na.omit(as.vector(i)), ]

sb2 <- dismo::ssb(pres_test_pwd, back_test_pwd, pres_train)

sb2[1]/ sb2[2]

## [1] 1.001817

Spatial sorting bias is much reduced now; but notice how the AUC dropped.

bc <- envelope(predictors, pres_train)

ptst <- predict(bc, extract(predictors, pres_test))

btst <- predict(bc, extract(predictors, back_test))

pa_evaluate(ptst, btst)@stats

## np na prevalence auc cor pcor ODP

## 1 29 125 0.1883117 0.7657931 0.2612974 0.001063167 0.8116883

pwdptst <- predict(bc, extract(predictors, pres_test_pwd))

pwdbtst <- predict(bc, extract(predictors, back_test_pwd))

pa_evaluate(pwdptst, pwdbtst)@stats

## np na prevalence auc cor pcor ODP

## 1 14 14 0.5 0.4566327 -0.1513145 0.4421203 0.5